
In collaboration with Cornell, Waterloo, and Stanford.

Brains on Chips

Deep neural networks have done some pretty impressive things, but they have drifted far from the biology that inspired them. Instead of a dynamic, adaptive system with intricate connections and continual feedback between pools of neurons, we’ve built giant, static, monolithic matrices that, through sheer dimensionality, found the closed-form equation for “hotdog/not-a-hotdog”.

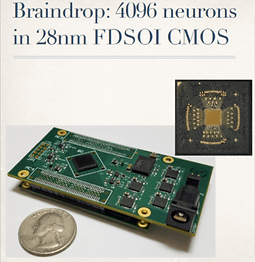

Braindrop was a two-pronged effort. First, we sought to build a platform for engineers to design biologically inspired neural networks the Right Way™: intelligently designed adaptive brains that rely on clever systems architecture rather than on throwing more neurons at the problem. We achieved this by extending the Neural Engineering Framework (NEF) alongside the brilliant team at Waterloo.
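For readers new to the NEF, its core idea is that a population of neurons represents a value x by giving each neuron a preferred direction (encoder), a gain, and a bias, and that a linear readout (decoders) found by least squares can recover x or any function of x from the firing rates. The following is a minimal one-dimensional NumPy sketch of that encode/decode idea, not Braindrop's toolchain or Nengo's code. The tuning ranges (intercepts in [-0.9, 0.9], max rates of 200 to 400 Hz), the LIF time constants, and the 0.1 regularization are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n_neurons = 100
tau_rc, tau_ref = 0.02, 0.002  # assumed LIF membrane and refractory time constants (s)

def lif_rate(J):
    """Steady-state LIF firing rate (Hz) for normalized current J (threshold at J = 1)."""
    J = np.asarray(J, dtype=float)
    rate = np.zeros_like(J)
    above = J > 1.0
    rate[above] = 1.0 / (tau_ref - tau_rc * np.log1p(-1.0 / J[above]))
    return rate

# Illustrative (assumed) random tuning: preferred direction, threshold, and max rate.
encoders = rng.choice([-1.0, 1.0], size=n_neurons)
intercepts = rng.uniform(-0.9, 0.9, size=n_neurons)
max_rates = rng.uniform(200, 400, size=n_neurons)

# Pick gain and bias so each neuron starts firing at its intercept
# and reaches its max rate when x equals its encoder.
J_max = 1.0 / (1.0 - np.exp((tau_ref - 1.0 / max_rates) / tau_rc))
gains = (J_max - 1.0) / (1.0 - intercepts)
biases = 1.0 - gains * intercepts

def activities(x):
    """Encode: firing-rate matrix of shape (n_samples, n_neurons) for scalar inputs x."""
    J = gains * encoders * x[:, None] + biases
    return lif_rate(J)

def solve_decoders(x, target, reg=0.1):
    """Decode: L2-regularized least squares for weights d such that A @ d ≈ target."""
    A = activities(x)
    sigma = reg * A.max()  # regularization scaled to the largest firing rate
    gram = A.T @ A + (sigma ** 2) * len(x) * np.eye(A.shape[1])
    return np.linalg.solve(gram, A.T @ target)

x = np.linspace(-1, 1, 200)
d_identity = solve_decoders(x, x)      # represent x itself
d_square = solve_decoders(x, x ** 2)   # compute a nonlinear function of x

A = activities(x)
rmse_identity = np.sqrt(np.mean((A @ d_identity - x) ** 2))
rmse_square = np.sqrt(np.mean((A @ d_square - x ** 2) ** 2))
print(f"RMSE x: {rmse_identity:.4f}, RMSE x^2: {rmse_square:.4f}")
```

Swapping the decoding target from `x` to `x ** 2` is all it takes to compute a nonlinear function with the same neurons. That is the sense in which the NEF lets you design what a population computes instead of training a monolithic matrix end to end.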

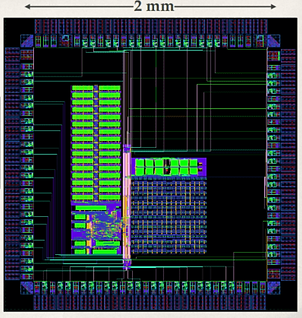

Next, we architected and taped out an ASIC that could be reconfigured to run any neural model built with the NEF in real time using leaky integrate-and-fire (LIF) neurons, much like a biological brain. Completing the holy trinity of design methodologies that oppose the mainstream, the architecture combined analog circuits from Stanford with asynchronous VLSI (computers without clocks) from my research team at Cornell.
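A leaky integrate-and-fire neuron integrates its input current onto a leaky "membrane" voltage, emits a spike when that voltage crosses a threshold, then resets and stays silent for a refractory period. Here is a minimal discrete-time Python sketch of that model, assuming normalized units (threshold 1, reset 0) and illustrative time constants of 20 ms and 2 ms. The chip's neurons are analog circuits with continuous dynamics rather than a stepped simulation, so this shows only the model being emulated.

```python
import math

def lif_rate(J, tau_rc=0.02, tau_ref=0.002):
    """Analytic steady-state firing rate (Hz) for a constant normalized current J."""
    if J <= 1.0:
        return 0.0
    return 1.0 / (tau_ref - tau_rc * math.log1p(-1.0 / J))

def simulate_lif(J, t_end=1.0, dt=1e-4, tau_rc=0.02, tau_ref=0.002, v_th=1.0):
    """Forward-Euler simulation of one LIF neuron; returns spike times in seconds."""
    ref_steps = int(round(tau_ref / dt))  # refractory period in whole time steps
    v, ref_left, spikes = 0.0, 0, []
    for k in range(int(round(t_end / dt))):
        if ref_left > 0:              # silent while refractory; voltage held at reset
            ref_left -= 1
            continue
        v += dt * (J - v) / tau_rc    # leaky integration: tau_rc * dv/dt = J - v
        if v >= v_th:                 # fire: record spike, reset, enter refractory period
            spikes.append((k + 1) * dt)
            v = 0.0
            ref_left = ref_steps
    return spikes

# Over a 1 s run, the spike count equals the firing rate in Hz.
for J in (0.9, 1.5, 2.0, 4.0):
    print(f"J={J}: simulated {len(simulate_lif(J))} Hz, analytic {lif_rate(J):.1f} Hz")
```

Below threshold (J ≤ 1) the voltage settles at J and the neuron never fires. Above it, the rate climbs with the input but saturates near 1/tau_ref because of the refractory period. These are the firing rates an NEF decoder reads out.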

The architecture supported a million neurons running simultaneously on a few watts of power. Running the equivalent on a GTX 980 farm (oops, I dated the project) would take 1.21 gigawatts. And we only mildly fudged the numbers for the Back to the Future reference.

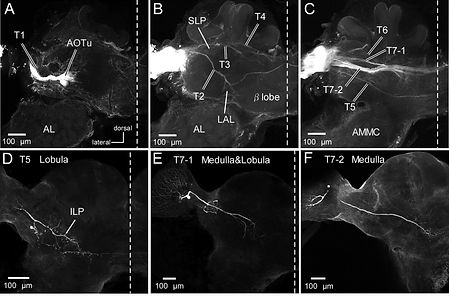

For a sense of scale, a cockroach brain has about a million neurons. A cockroach seeks out food, solves navigation problems, communicates, and has complex motor functions. They have a drive for survival and reproduction. Arguably, they feel desire and fear. Dare I say love?

Now, my ego isn't quite big enough to claim our architecture could feel love. And if anything, it would have felt hatred. But it is a rock that we tricked into doing computations by carving microscopic runes into it. And we can make those computations look a lot like real biological brains. That's just about as fantastical to me.

Our neuromorphic chip offered higher performance, lower power, higher neural density, generalizability to a wider set of applications, higher reliability, and better neural efficiency than traditional deep learning with DNNs on GPUs. The main obstacles to mass adoption are now social rather than technological. We are asking circuit designers to give up the deeply ingrained design philosophies of digital and synchronous logic, and we are asking neural network designers to dive into the brains they build and move individual pools of neurons around themselves.

To chip away at the latter concern, the Nengo project is an ongoing effort to bring the NEF into the age of dynamic languages and graphical IDEs. Waterloo has also been seeding the community with an annual summer school focused on NEF-style neural network design.

As for asynchronous logic, it may just be a waiting game. To quote Intel’s keynote speaker at the QDI national conference: “You guys are correct. This is the right way to design circuits. And we will adopt it kicking and screaming after exhausting every other possible option.”